Global data from Cisco highlights a worrying trend: Australian organizations are starting to fall into a “trust gap” between what customers expect them to do with data and privacy and what is actually happening.

New data shows that 90% of people want to see organizations transform to better manage data and risk. Because the regulatory environment has fallen behind, Australian organizations will need to move faster than regulation to deliver this.

Australian businesses need to take it seriously

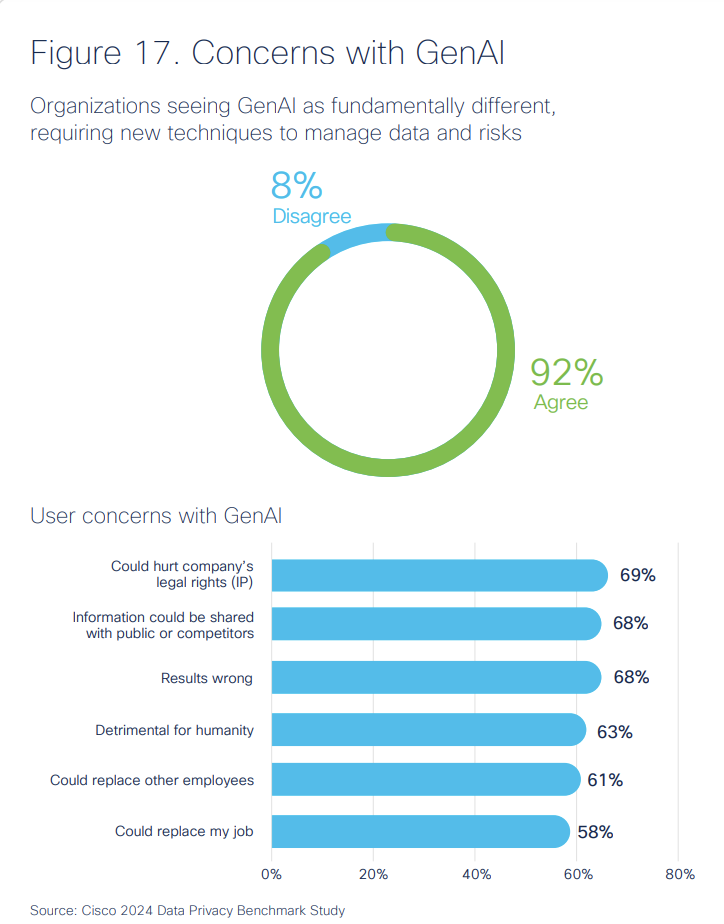

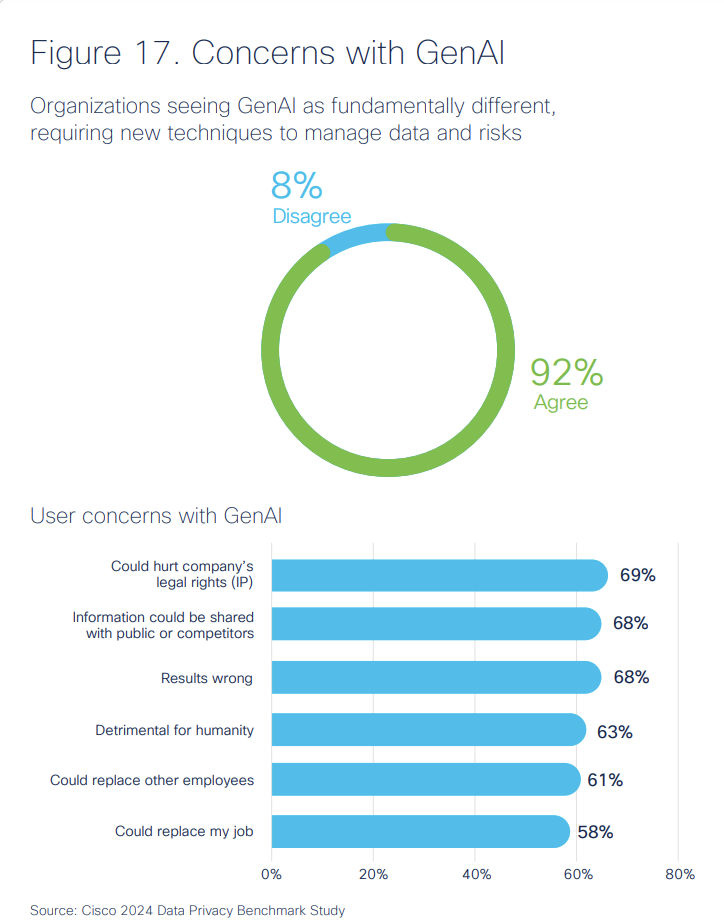

According to a recent Cisco global study, more than 90% of people believe that generative AI requires new techniques to manage data and risk (Figure A). Meanwhile, 69% are concerned about the potential for legal and IP rights to be compromised, and 68% are concerned about the risk of disclosure to the public or competitors.

Essentially, while customers appreciate the value that AI can bring them in terms of personalization and service levels, they are also uncomfortable with the implications for their privacy if their data is used as part of AI models.

PREMIUM: Australian organizations should consider an AI ethics policy.

About 8% of participants in the Cisco survey were from Australia, although the study does not break down the above concerns by territory.

Australians are more likely to breach data security policies despite data privacy concerns

Other research shows that Australians are particularly sensitive to the way organizations use their data. According to research by Quantum Market Research and Porter Novelli, 74% of Australians are concerned about cybercrime. In addition, 45% are worried about their financial information being taken, and 28% are worried about their identity documents, such as passports and driver's licenses (Figure B).

However, Australians are also twice as likely as the global average to breach data security policies at work.

As Gartner VP Analyst Nader Henein said, organizations should be very concerned about this breach of customer trust because customers will be quite happy to take their wallets and walk away.

“The fact is that consumers today are more than happy to cross the street to the competition and, in some instances, pay a premium for the same service, if that's where they believe their data and the data of their family are better taken care of,” Henein said.

Voluntary regulation in Australian organizations is not great for data privacy

Part of the problem is that, in Australia, regulation of data privacy and AI is largely voluntary.

SEE: What Australian IT leaders need to focus on ahead of privacy reforms.

“From a regulatory perspective, most Australian businesses are focused on breach disclosure and reporting, given all the high-profile incidents in the last couple of years. But when it comes to core privacy aspects, there is little requirement for companies in Australia. The main pillars of privacy such as transparency, consumer privacy rights and explicit consent are simply missing,” Henein said.

Only Australian organizations that have done business overseas and found themselves subject to external regulation have needed to improve; Henein pointed to the GDPR and New Zealand's privacy laws as examples. Other organizations must make building trust with their customers an internal priority.

Build trust in the use of data

While the use of data in AI may be largely unregulated and voluntary in Australia, there are five things the IT team can, and should, champion throughout the organization:

- Transparency on data collection and use: Transparency around data collection can be achieved through clear and easy-to-understand privacy policies, consent forms and opt-out options.

- Accountability for data governance: Everyone in the organization must recognize the importance of data quality and integrity in data collection, processing and analysis, and there must be policies in place to enforce this behavior.

- High data quality and accuracy: Data collection and use must be accurate, as misinformation can make AI models unreliable, which can then undermine trust in data security and management.

- Proactive incident detection and response: An inadequate incident response plan can lead to damage to an organization's reputation and data.

- Customer control over their data: All services and features that involve the collection of data should allow the customer to access, manage and delete their data on their terms and when they want.
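
For IT teams wondering what consent and customer control might look like in practice, the following is a minimal sketch only. The class and method names (`CustomerDataStore`, `grant_consent`, `export`, and so on) are hypothetical and do not come from Cisco, Gartner or any Australian regulation. It assumes an in-memory store and leaves out authentication, audit logging and persistence, all of which a real system would need.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class CustomerRecord:
    """Hypothetical record: personal data plus the purposes the customer agreed to."""
    data: Dict[str, str] = field(default_factory=dict)
    consented_purposes: Set[str] = field(default_factory=set)


class CustomerDataStore:
    """Illustrative in-memory store (an assumption, not a production design)."""

    def __init__(self) -> None:
        self._records: Dict[str, CustomerRecord] = {}

    def grant_consent(self, customer_id: str, purpose: str) -> None:
        # Explicit opt-in: nothing is collected for a purpose until this is called.
        record = self._records.setdefault(customer_id, CustomerRecord())
        record.consented_purposes.add(purpose)

    def withdraw_consent(self, customer_id: str, purpose: str) -> None:
        # Opt-out: the customer can revoke a purpose at any time.
        record = self._records.get(customer_id)
        if record is not None:
            record.consented_purposes.discard(purpose)

    def may_use(self, customer_id: str, purpose: str) -> bool:
        # Check before any processing, for example before training an AI model.
        record = self._records.get(customer_id)
        return record is not None and purpose in record.consented_purposes

    def collect(self, customer_id: str, key: str, value: str, purpose: str) -> None:
        # Refuse to store data for a purpose the customer has not agreed to.
        if not self.may_use(customer_id, purpose):
            raise PermissionError(f"No consent from {customer_id} for {purpose}")
        self._records[customer_id].data[key] = value

    def export(self, customer_id: str) -> Dict[str, str]:
        # Access: return a copy of everything held about the customer.
        record = self._records.get(customer_id)
        return dict(record.data) if record is not None else {}

    def delete(self, customer_id: str) -> None:
        # Deletion: remove the customer's data and consent history entirely.
        self._records.pop(customer_id, None)
```

In this sketch, a call such as `store.collect("c1", "email", "a@example.com", "model_training")` raises `PermissionError` unless the customer has first opted in to the `model_training` purpose, which mirrors the explicit consent and opt-out points in the list above.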

Self-regulate now to prepare for the future

Currently, data privacy law, including for data collected and used in AI models, is governed by old regulations created before AI models were even in use. Therefore, the only regulation that Australian companies apply is self-determined.

However, as Gartner's Henein said, there is a lot of consensus about the right way to manage data when it is used in these new and transformative ways.

SEE: Australian organizations to focus on ethics of data collection and use in 2024.

“In February 2023, the Privacy Act Review Report was published with excellent recommendations aimed at modernizing data protection in Australia,” Henein said. “Seven months later in September 2023, the Federal Government responded. Of the 116 proposals in the original report, the government responded favorably to 106.”

For now, some executives and boards may resent the idea of self-imposed regulation, but the benefit is that an organization that can demonstrate it takes these steps will gain a stronger reputation among customers and be seen as taking their concerns around data use seriously.

Meanwhile, some in the organization may be concerned that imposing self-regulation could stifle innovation. As Henein said in response to this: “Would you have delayed the introduction of seat belts, crumple zones and airbags for fear of having these aspects slow down developments in the automotive industry?”

Now is the time for IT professionals to take charge and start bridging that trust gap.