Australian governments at the local, state and federal levels see the value of generative AI. However, unusually long spending restrictions and concerns about automation disasters are slowing the adoption of AI, at least when it comes to citizen-facing solutions.

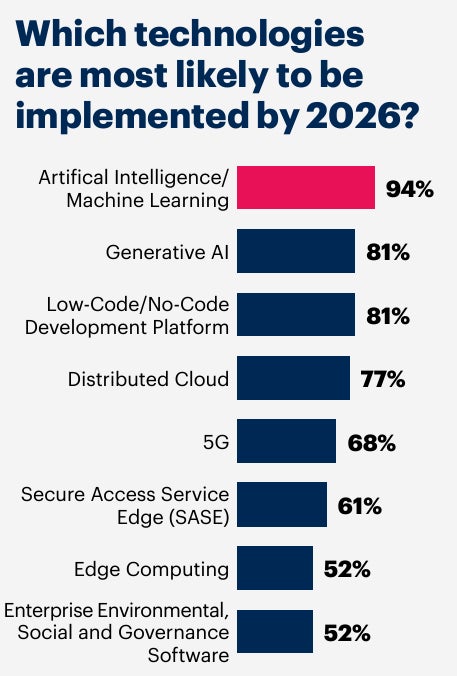

According to a Gartner survey of CIOs in APAC governments (with Australia sitting right in the middle of the trend), AI/machine learning and generative AI are the two biggest priorities to be implemented by 2026 (Figure A). However, other pressures make government agencies hesitant to adopt AI in areas that the Australian government considers critically important.

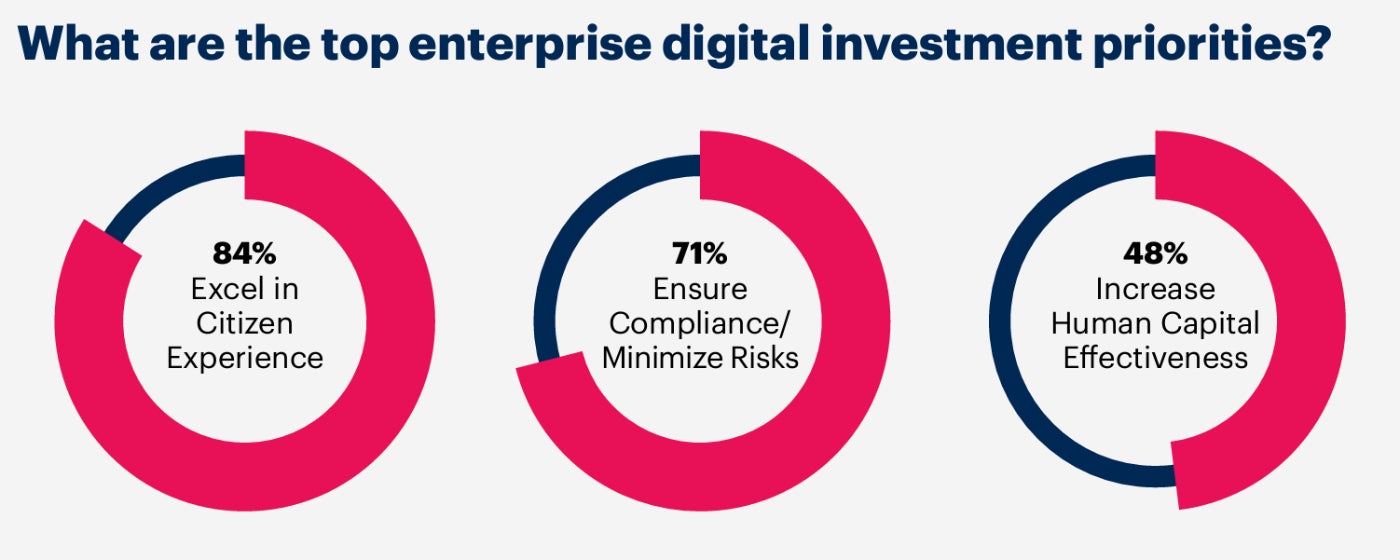

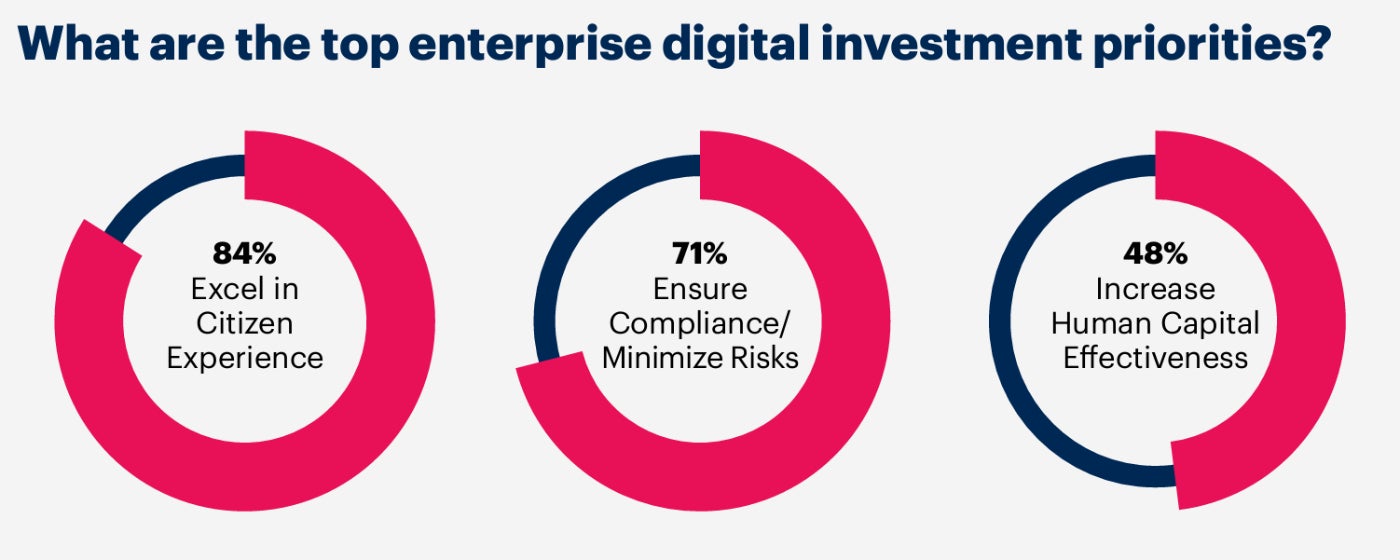

For example, while Gartner research shows that 84% of CIOs consider investing to excel in the citizen experience a top priority (Figure B), fewer than 25% of government organizations will have generative AI-enabled services for citizens by 2027.

The Australian government's disconnect between the desire to implement AI and the ability to deliver

As Gartner VP Analyst Dean Lacheca said in an interview with TechRepublic, there is public skepticism around large public learning models, with concerns about privacy, security and data preparation affecting the speed of AI adoption. This is a particularly sticky problem in Australia, where automation, including AI, in government services has caused material harm. As a result, there is an inherent distrust of any application that is perceived to automate interactions with citizens across government.

Most notably, while not an application of AI, the “Robodebt” scandal that affected Australians so significantly resulted in a Royal Commission following a change of government. The automation at the center of that controversy has made many government agencies hesitant to announce publicly that they are exploring the use of AI.

“This association with automation is not expressed, but the main feeling is that government agencies are aware that there is a lot of reputational risk here if they get it wrong,” said Lacheca. “There is some frustration at the executive level because they can't move faster in the AI space, but there is validity in the conservative approach to the first steps and to fully thinking through the use cases.”

Tightening budgets are also affecting AI adoption by the Australian government

This conservatism is also exacerbated by a long period of austerity in government IT spending, which Lacheca said affects the types of projects that have been greenlit. There is an understanding of the need for investment, he said, but the leaders who greenlight projects are focused entirely on productivity, effectiveness and a fast ROI.

With AI being a new area for many CIOs and their teams in government, and with AI requiring transformation and new approaches to technology, finding and then articulating the right projects that can deliver quickly can be challenging.

“Because the goals of the projects are generally relatively modest, in the search for that quick ROI, there is also a bit of education that the IT teams need to engage in with the executive,” said Lacheca. “We often hear elements of frustration along the lines of 'my teenage son is at home with ChatGPT, why make this more complicated for us?'

“So, managing the expectations of what can be achieved with the technology, given the emphasis on quick goals, and overcoming the hesitation around citizen services is part of the process of AI adoption in government now.”

Gartner's solution: Focus on delivering internal applications first

According to Gartner, the solution to these AI adoption challenges is to start by delivering applications that are not citizen-facing but that can support productivity gains within the organization. This “low-hanging fruit” lets government agencies and departments avoid the perceived risks associated with AI in citizen services while building the critical knowledge and skills needed to develop more ambitious AI strategies.

Agencies should also build trust and mitigate associated risks by establishing clear AI governance and assurance for internally developed and purchased AI capabilities, Gartner's guidance adds.

“There is a lot that government organizations need to do to take steps in AI,” said Lacheca. “For one use case, for example, an agency might want to summarize a set of data, but that data contains personal information and maybe doesn't have the right metadata tags. They might need to analyze that.

“Others are looking for cloud solutions where data never leaves their world, and others are looking for the 'stained glass' approach that will ensure a level of data obfuscation on the way out as a means of privacy protection. There is a great deal of architectural maturity involved in how AI systems are implemented, so organizations should seek to develop these capabilities before applying them to public implementations,” he added.

How partners should seek to engage the Australian government regarding AI

These internal tensions also affect the partners of Australian government agencies. The appetite for AI is there, but getting cut-through and helping to implement solutions means understanding that austerity in government budgets has been unusually long and that sensitivity to potential consequences is greater than it might be in other sectors.

“The next steps that the government might take will probably be much slower than perhaps some of the commercial counterparts, because their appetite for risk has to be different,” said Lacheca.

For IT professionals working in and with government agencies, Gartner's advice on how to get ahead with AI boils down to being able to demonstrate a fast ROI with minimal risk to public data and citizen interactions. Partners who can deliver this will be in a strong position when the government next begins to accelerate adoption to meet its longer-term ambitions.